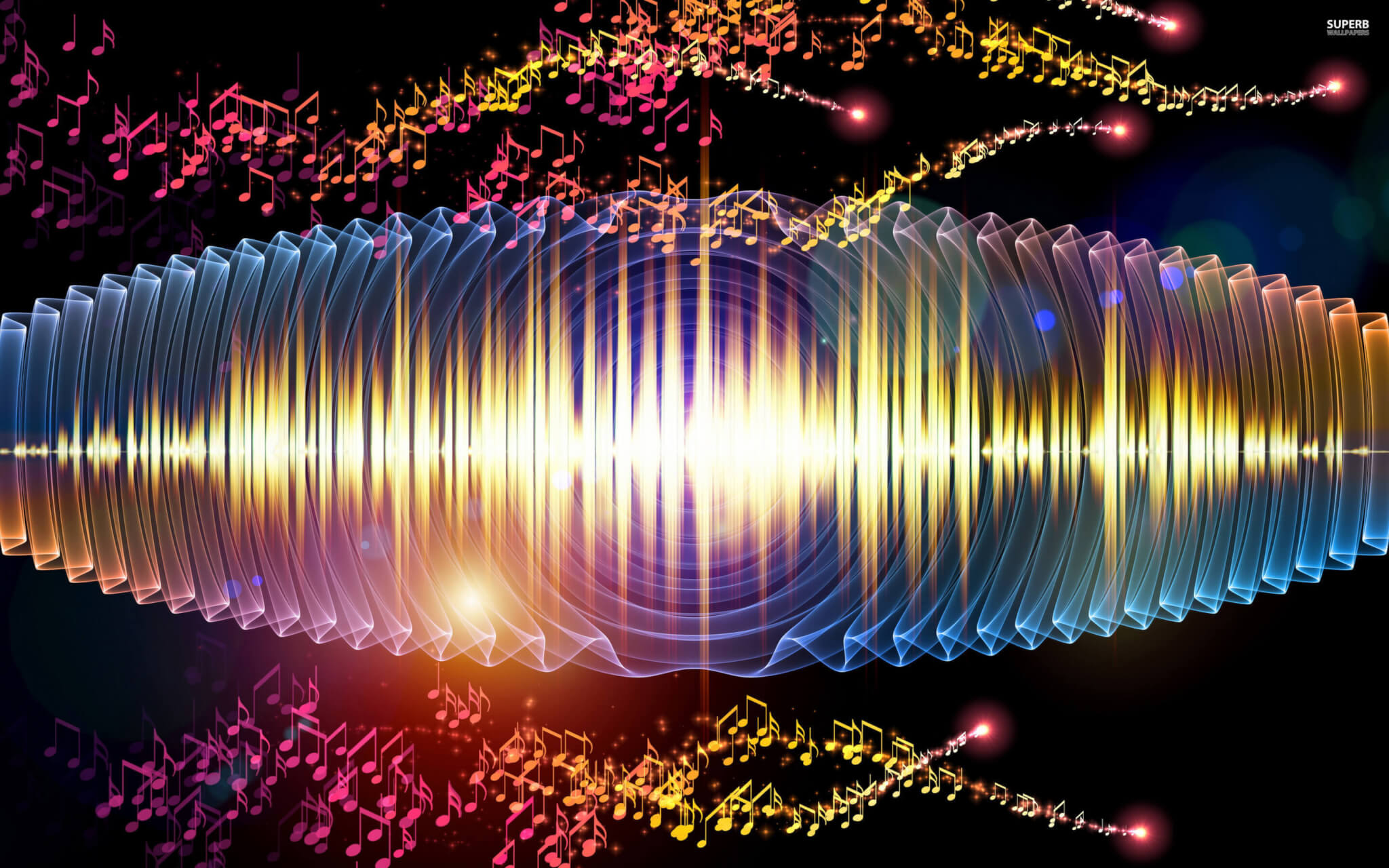

Google Is Using AI To Synthesize Incredible New Sounds

The tech giant is delving deep into neural audio synthesis.

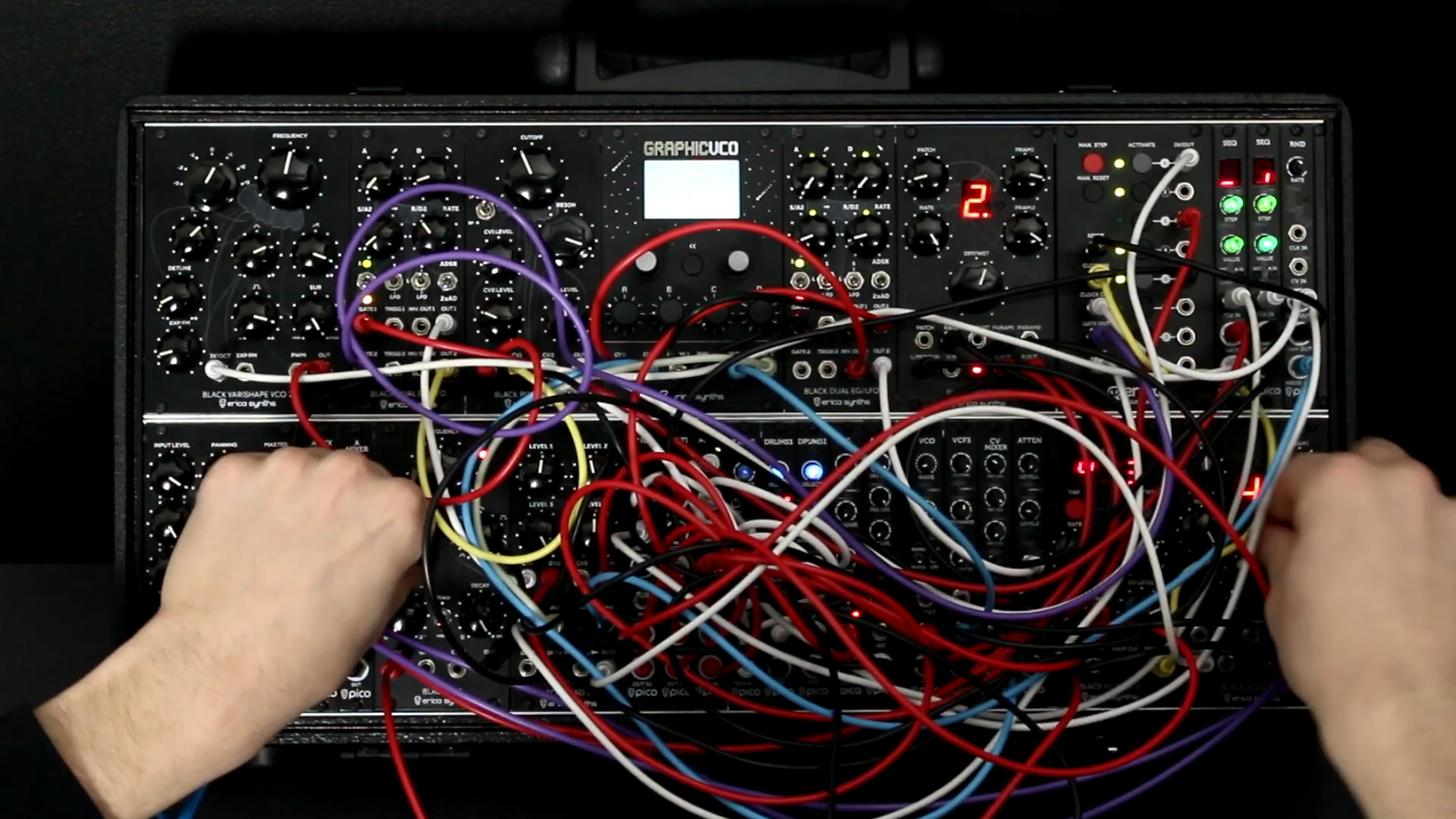

Google’s group of AI researchers, called Magenta, is working on ways to synthesize sounds without the use of physical components such as oscillators or circuit boards. Following successful experiments using machine learning to jam on digital synths, Magenta is developing NSynth (short for “neural synthesizer”), a program that is fed samples of real-life instruments such as organs, flutes and basses and then distills them into crazy new synthesized sounds. NSynth’s output can sound garbled at times, but combining the sounds it has analyzed can produce truly extraordinary effects.

The Magenta team is looking to push the limits of how AI can be used to create new tools for musicians and to aid the creative process in original ways. Music critic Marc Weidenbaum has pointed out that “artistically, the project could yield some cool stuff, and because it’s Google, people will follow their lead.” Take a look at the latest updates on the Magenta project blog, and listen to some audio clips below.